Data Flow and Analysis

All the data produced by the detector are recorded on disk at the European Gravitational Observatory (EGO) and stored there for varying lengths of time. They can be analyzed locally but are also distributed to external computing centers (in Italy, France, the USA, etc.) with varying time delays (latencies).

The main scientific analyses performed at EGO are part of the “low-latency” (or rapid) searches: Virgo and LIGO data are scanned to identify potential gravitational-wave signals (the candidates) within a few tens of seconds and, after a rapid check of the quality of these data, a public alert is generated and sent to the astronomical community. This allows telescopes to carry out follow-up observations of the area of the sky where the source of the possible gravitational-wave signal has been located.

All the other scientific analyses are carried out offline (meaning with a latency of weeks if not months) using resources (CPU and storage) from different computing centers worldwide. Software packages and environments are managed and distributed by the International Gravitational-Wave Observatory Network (IGWN). Depending on the characteristics of the gravitational-wave signals being searched for, offline analyses need different amounts of data: those looking for transient signals scan the data as the online analysis does, but in greater depth and using more information, while those trying to find permanent signals accumulate statistics as new data become available.

During an observing run, all Virgo data are copied to the two main computing centers used by the collaboration: CNAF in Bologna (Italy) and CC-IN2P3 in Villeurbanne (France). These two copies are deliberately redundant, to make sure that all original data will be preserved.

Data analysis procedures

Given the variety of gravitational-wave signals (transient signals of various durations, continuous and periodic signals, stochastic combinations of individual signals too weak to be detected individually), many different data analysis methods must be used. Most of the algorithms are optimized for a particular class of signals and depend on additional factors, such as whether models predicting the signal shapes are available, or how much computing power is available to run a particular analysis. The main data analysis categories are:

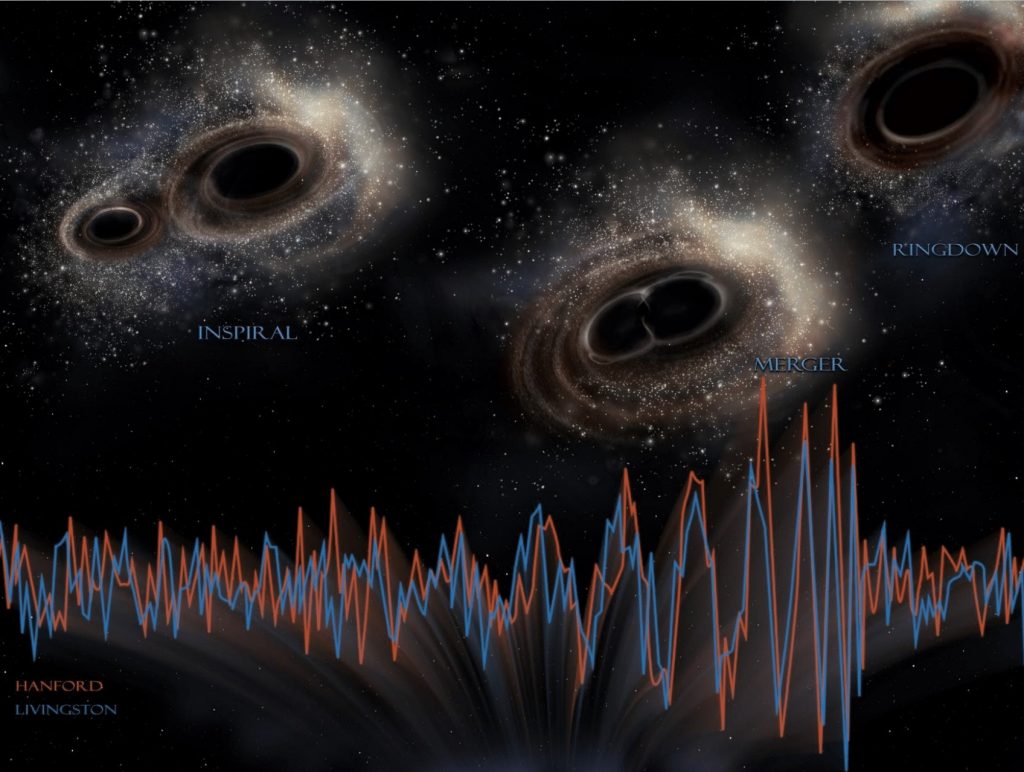

Matched filter-based techniques, for all cases in which we have accurate templates describing the signal waveform as a function of the source parameters (component masses, etc.). This is the case for compact binary coalescence (CBC) searches.
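As a rough illustration of the matched-filter idea, the sketch below slides a known template over noisy data and normalizes the result to a signal-to-noise ratio. It is a toy under strong assumptions, not the method used in actual CBC searches: the noise is taken to be white and Gaussian with known standard deviation, the "chirp" template is invented for the example, and the sampling rate, injection time and amplitude are all hypothetical values.

```python
import numpy as np

def matched_filter_snr(data, template, sigma):
    """SNR time series for a known template in white Gaussian noise (std = sigma)."""
    # Correlate the template with the data at every possible start offset.
    corr = np.correlate(data, template, mode="valid")
    # Normalise so that noise-only data gives an output with unit variance.
    norm = sigma * np.sqrt(np.sum(template ** 2))
    return corr / norm

rng = np.random.default_rng(0)
fs = 1024                                  # sampling rate in Hz (hypothetical)
t = np.arange(0, 0.25, 1 / fs)
# Toy "chirp": frequency sweeping upwards from 50 Hz, tapered by a Hann window.
template = np.sin(2 * np.pi * (50 * t + 200 * t ** 2)) * np.hanning(t.size)

sigma = 1.0
data = rng.normal(0.0, sigma, 8 * fs)      # 8 s of simulated white noise
inject_at = 3000                           # sample index of the hidden signal
data[inject_at:inject_at + template.size] += 2.0 * template

snr = matched_filter_snr(data, template, sigma)
peak = int(np.argmax(np.abs(snr)))
print(f"loudest SNR {snr[peak]:.1f} at sample {peak}")
```

In a real search the noise is not white, so the correlation is weighted by the measured noise spectrum, and the data are compared against a large bank of templates covering the possible source parameters.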

Robust methods, which scan spectrograms for excess power above the expected noise contribution in a given frequency range. They are used in searches for bursts, such as those from supernova explosions, for which we do not have precise information on the signal waveform.
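The excess-power idea can be sketched as follows: the data are cut into short segments, each segment is Fourier transformed, and the power in every time-frequency cell is compared with a noise estimate for that frequency. This is a minimal sketch, assuming simulated white noise and an invented short burst (a sine-Gaussian at 200 Hz); the segment length and all numerical values are hypothetical, and real burst pipelines are considerably more elaborate.

```python
import numpy as np

def excess_power_map(data, seg_len):
    """Time-frequency power map, normalised by the median power per frequency bin."""
    n_seg = data.size // seg_len
    segs = data[: n_seg * seg_len].reshape(n_seg, seg_len)
    window = np.hanning(seg_len)
    power = np.abs(np.fft.rfft(segs * window, axis=1)) ** 2
    # The median over time is a noise estimate that a short burst barely affects.
    noise = np.median(power, axis=0)
    return power / noise

rng = np.random.default_rng(1)
fs = 1024                                   # sampling rate in Hz (hypothetical)
data = rng.normal(0.0, 1.0, 16 * fs)        # 16 s of simulated white noise
t = np.arange(data.size) / fs
# Inject a short burst with no template assumed by the search.
data += 8.0 * np.exp(-((t - 5.0625) / 0.02) ** 2) * np.sin(2 * np.pi * 200 * t)

seg_len = 128
tf_map = excess_power_map(data, seg_len)
seg, fbin = np.unravel_index(np.argmax(tf_map), tf_map.shape)
burst_time = seg * seg_len / fs             # start time of the loudest segment
burst_freq = fbin * fs / seg_len            # frequency of the loudest bin
print(f"loudest cell: t = {burst_time:.3f} s, f = {burst_freq:.0f} Hz")
```

No waveform model enters the search itself; the only requirement is that the burst deposits more power in some time-frequency cell than the noise is expected to.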

Fourier Transform-based methods for periodic continuous signals, with different implementations depending on how well the source parameters are known (position in the sky, gravitational-wave frequency, frequency variation rate, orbital parameters in the case of a binary system). The Fourier Transform of a time series of data represents the data in terms of its frequency components rather than as an evolution in time.
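To illustrate why the frequency representation helps, the sketch below hides a sinusoid in noise five times larger than its amplitude and recovers its frequency from the Fourier Transform. It is a simplified example: the frequency is assumed perfectly constant, the noise is simulated white noise, and the values of `fs`, `duration` and `f0` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
fs = 256                                   # sampling rate in Hz (hypothetical)
duration = 64                              # observation time in seconds
t = np.arange(fs * duration) / fs
f0 = 37.5                                  # frequency of the hidden signal in Hz

# Sinusoid of amplitude 0.2 buried in noise with standard deviation 1.
data = rng.normal(0.0, 1.0, t.size) + 0.2 * np.sin(2 * np.pi * f0 * t)

# Frequency-domain representation of the real-valued time series.
spectrum = np.fft.rfft(data)
freqs = np.fft.rfftfreq(data.size, d=1 / fs)
power = np.abs(spectrum) ** 2 / data.size  # noise-only bins average about 1

# Skip the zero-frequency bin and pick the loudest frequency.
f_peak = freqs[np.argmax(power[1:]) + 1]
print(f"loudest frequency: {f_peak:.3f} Hz")
```

The signal is invisible in the time series but concentrates in a single frequency bin. With this normalization the power in that bin grows in proportion to the number of samples while the noise level stays the same, which is why searches for permanent signals benefit from accumulating data. Real continuous-wave searches must also correct for frequency drifts and for the motion of the detector relative to the source, which this sketch ignores.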

Cross-correlation-based methods, to search for stochastic signals (which look like noise but are correlated between detectors).
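The principle behind cross-correlation can be shown with two simulated detectors whose own noise is independent but which share a weak common random component. This is a minimal sketch under simplifying assumptions: white noise, identical detector responses and zero time delay between sites. The variable names and numerical values are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 200_000

# Weak stochastic "background" seen identically by both detectors (variance 0.09).
common = 0.3 * rng.normal(size=n)
det1 = rng.normal(size=n) + common          # detector 1: own noise + background
det2 = rng.normal(size=n) + common          # detector 2: own noise + background

# Zero-lag cross-correlation: independent noise averages away, the common part stays.
products = det1 * det2
cc = products.mean()
cc_sigma = products.std() / np.sqrt(n)      # statistical uncertainty of the mean

# Control: shifting one stream in time destroys the correlation.
cc_shifted = np.mean(det1 * np.roll(det2, 1000))

print(f"correlated:   {cc:.4f} +/- {cc_sigma:.4f}")
print(f"time-shifted: {cc_shifted:.4f}")
```

Neither data stream alone reveals the background, since it is much weaker than the detector noise, but the averaged product converges to its variance as more data are accumulated. Actual stochastic searches additionally account for the separation and relative orientation of the detectors and for the frequency dependence of their noise.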

The search for long transients, quasi-periodic or exotic signals can be done with appropriate extensions of the methods used for CBC, bursts or periodic waves, depending on the case.

In addition to these, an increasing number of applications of machine learning techniques are being studied.

Once candidate signals associated with possible gravitational-wave sources have been verified and found to be statistically significant, parameter estimation procedures are applied in order to study the characteristics of the source in detail.